Core Concepts

Learn about data and Empower

Data is the raw information that drives decision-making. It is the fuel for artificial intelligence and analytics, and it is what makes innovation possible.

Every company has data.

But that data is scattered. Companies use some systems to store human resources data and others for finance, supply chain, or even compliance.

Specialized applications are extremely useful, and often downright necessary. An unfortunate side effect of these disparate systems is that they create Data Silos, which prevent companies from obtaining a single source of truth about their data estate.

That's why we built Empower.

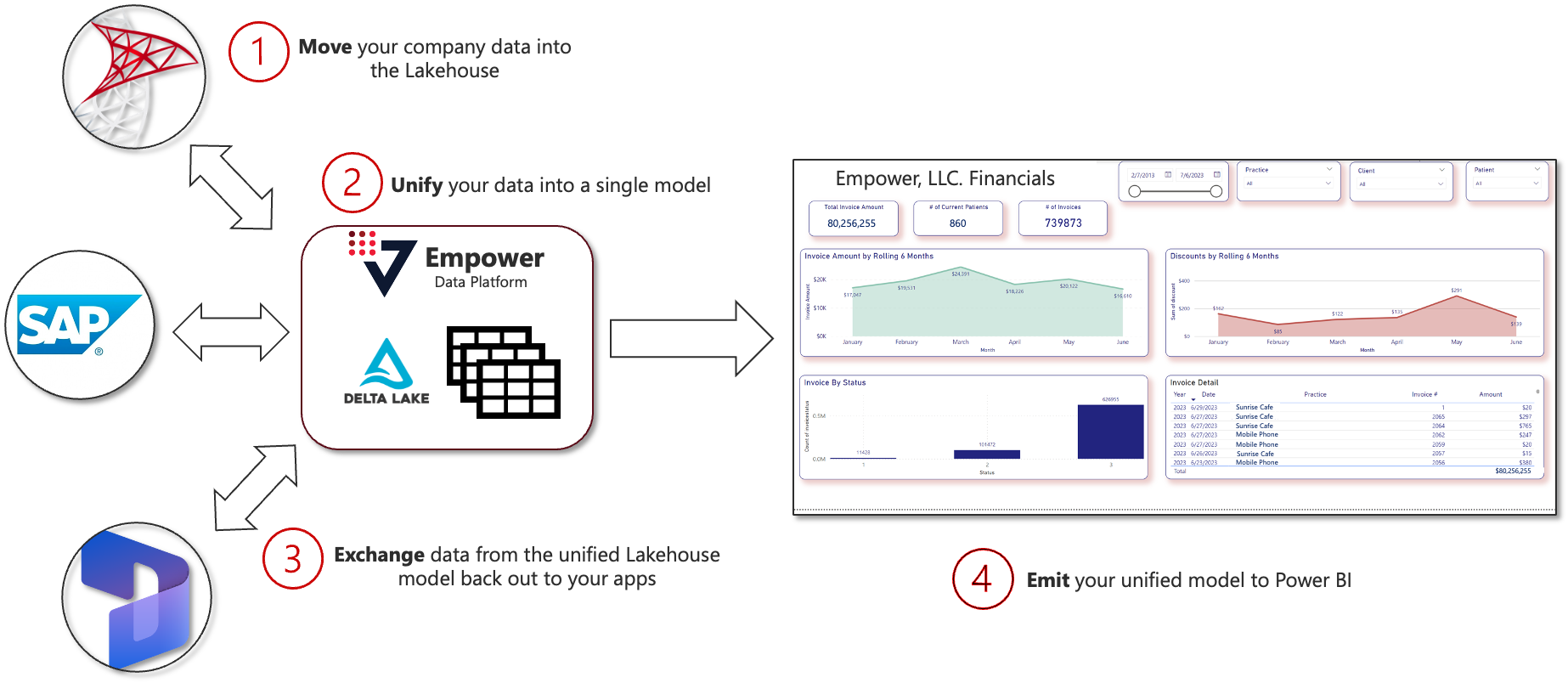

Empower lets you keep using your existing tools while generating business value across systems. Using a Data Lakehouse as a staging ground, Empower enables you to create a unified data model for your company.

Simplify data management with Empower's Lakehouse centralization strategy.

Data Categories

Not all data is alike.

Depending on its format and organization, data can be categorized as follows:

- Structured Data: Data that adheres to a fixed schema or model. It's organized and easy to search, typically stored in relational databases or spreadsheets.

- Semi-Structured Data: Data that does not conform to a rigid data model but does contain tags or other markers to separate data elements and enforce hierarchies, e.g., XML and JSON files.

- Unstructured Data: Data that doesn't have a specific form or model. It is often text-heavy, like Word documents or PDFs, but can also include images, videos, and social media posts.
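To make the three categories concrete, here is a small Python sketch. The sample CSV, JSON, and memo contents are invented for illustration; the point is only how each category is read: by fixed column, by tag and nesting, or with no schema at all.

```python
import csv
import io
import json

# Structured: every row has the same fixed columns (hypothetical HR extract).
csv_text = "employee_id,department,salary\n101,Finance,72000\n102,HR,65000\n"
rows = list(csv.DictReader(io.StringIO(csv_text)))

# Semi-structured: keys act as tags and nesting expresses hierarchy,
# but records are not forced into one rigid schema.
json_text = '{"order_id": 7, "customer": {"name": "Ada"}, "items": [{"sku": "A1", "qty": 2}]}'
order = json.loads(json_text)

# Unstructured: free text with no schema; any structure must be inferred later.
memo = "Q3 supplier review: shipments from the east warehouse were late twice."

print(rows[0]["department"])      # Finance
print(order["customer"]["name"])  # Ada
print(order["items"][0]["qty"])   # 2
```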

ETL vs ELT

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two approaches to moving data.

- ETL: Data is Extracted from a data origin, Transformed into a suitable format, and then Loaded into a data destination. This approach is best when the transformations are complex and when the quality and cleanliness of data are paramount.

- ELT: Data is Extracted from a data origin, Loaded into a data destination, and then Transformed. This approach is best when dealing with huge volumes of data, as it leverages the power of modern data warehouses to perform transformations.
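The difference between the two approaches is the order of the steps. The sketch below uses Python's built-in `sqlite3` module as a stand-in destination so the ELT transform can run as SQL inside the database. The table names (`sales`, `sales_raw`), the sample rows, and the cleaning rule are hypothetical; in a real ELT setup the transformation would run on the warehouse or Lakehouse engine, not on SQLite.

```python
import sqlite3

def extract() -> list[tuple[int, str]]:
    # Hypothetical source rows: amounts arrive as text, some with stray whitespace.
    return [(1, " 10.5 "), (2, "4"), (3, "n/a")]

def run_etl(db: sqlite3.Connection) -> None:
    # ETL: transform in flight (here, in Python), then load only the cleaned rows.
    cleaned = []
    for order_id, amount in extract():
        try:
            cleaned.append((order_id, float(amount.strip())))
        except ValueError:
            pass  # drop rows whose amount cannot be cast to a number
    db.execute("CREATE TABLE sales (order_id INTEGER, amount REAL)")
    db.executemany("INSERT INTO sales VALUES (?, ?)", cleaned)

def run_elt(db: sqlite3.Connection) -> None:
    # ELT: load the raw rows untouched, then transform inside the destination with SQL.
    db.execute("CREATE TABLE sales_raw (order_id INTEGER, amount TEXT)")
    db.executemany("INSERT INTO sales_raw VALUES (?, ?)", extract())
    db.execute(
        """
        CREATE TABLE sales AS
        SELECT order_id, CAST(TRIM(amount) AS REAL) AS amount
        FROM sales_raw
        WHERE TRIM(amount) GLOB '[0-9]*'  -- deliberately simple quality rule
        """
    )

etl_db, elt_db = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
run_etl(etl_db)
run_elt(elt_db)
query = "SELECT order_id, amount FROM sales ORDER BY order_id"
print(etl_db.execute(query).fetchall())  # [(1, 10.5), (2, 4.0)]
print(elt_db.execute(query).fetchall())  # [(1, 10.5), (2, 4.0)]
```

Both paths produce the same cleaned table, but only the ELT path keeps `sales_raw`, so the raw data stays available if the transformation logic changes later.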

Until recently, business analytics relied on fragile ETL pipelines. A mismatch between compute and storage capacity required data to be transformed mid-flight as it moved from business system to business system.

This does not scale well in the age of Big Data!

With today's technological advances and the advent of cloud services like Azure, it is now possible to extract, load, and then transform your data at scale. Empower uses ELT, leveraging powerful compute resources to bring your data into the Lakehouse before transforming it.

Data Warehouses, Data Lakes, and Data Lakehouses

"After extracting my data, where should I load it?"

You have three choices:

- Data Warehouse: A large repository for storing structured data from multiple sources in a way that facilitates analysis and reporting. It uses a schema-on-write approach, meaning the schema is defined before data is written to the warehouse.

- Data Lake: A storage repository that holds vast amounts of raw data in its native format until it is needed. It stores all types of data (structured, semi-structured, and unstructured) and uses a schema-on-read approach, meaning the schema is only defined when data is read.

- Data Lakehouse: A newer paradigm that combines the best elements of data lakes and data warehouses. It supports all types of data and offers the performance of a data warehouse with the flexibility of a data lake.

The Lakehouse can store all three types of data (structured, semi-structured, and unstructured) while still providing the scalability of a warehouse. At its core, Empower uses the Lakehouse to centralize your data before any transformation occurs.
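The schema-on-write versus schema-on-read distinction from the list above can be shown with a toy in-memory example. This is only a sketch of the two ideas: `SCHEMA`, `write_with_schema`, and `read_with_schema` are hypothetical names, and neither function reflects how a real warehouse, lake, or Lakehouse engine is implemented.

```python
import json

# Hypothetical table schema that a warehouse-style store enforces on write.
SCHEMA = {"id": int, "region": str}

def write_with_schema(table: list[dict], row: dict) -> None:
    # Schema-on-write: validate the row before it is stored; reject mismatches.
    if set(row) != set(SCHEMA) or not all(isinstance(row[k], t) for k, t in SCHEMA.items()):
        raise ValueError(f"row does not match schema: {row}")
    table.append(row)

def read_with_schema(raw_lines: list[str], columns: list[str]) -> list[dict]:
    # Schema-on-read: raw JSON lines are stored as-is; the schema (the columns
    # we care about) is applied only when the data is read.
    records = [json.loads(line) for line in raw_lines]
    return [{c: rec.get(c) for c in columns} for rec in records]

warehouse: list[dict] = []
write_with_schema(warehouse, {"id": 1, "region": "EU"})
try:
    write_with_schema(warehouse, {"id": "x", "region": "EU"})
except ValueError as err:
    print("rejected:", err)

lake = ['{"id": 2, "region": "US", "notes": "free text"}', '{"id": 3}']
print(read_with_schema(lake, ["id", "region"]))
# [{'id': 2, 'region': 'US'}, {'id': 3, 'region': None}]
```

The warehouse rejects the malformed row up front, while the lake accepts both raw records and only imposes structure when they are queried.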

Medallion Architecture

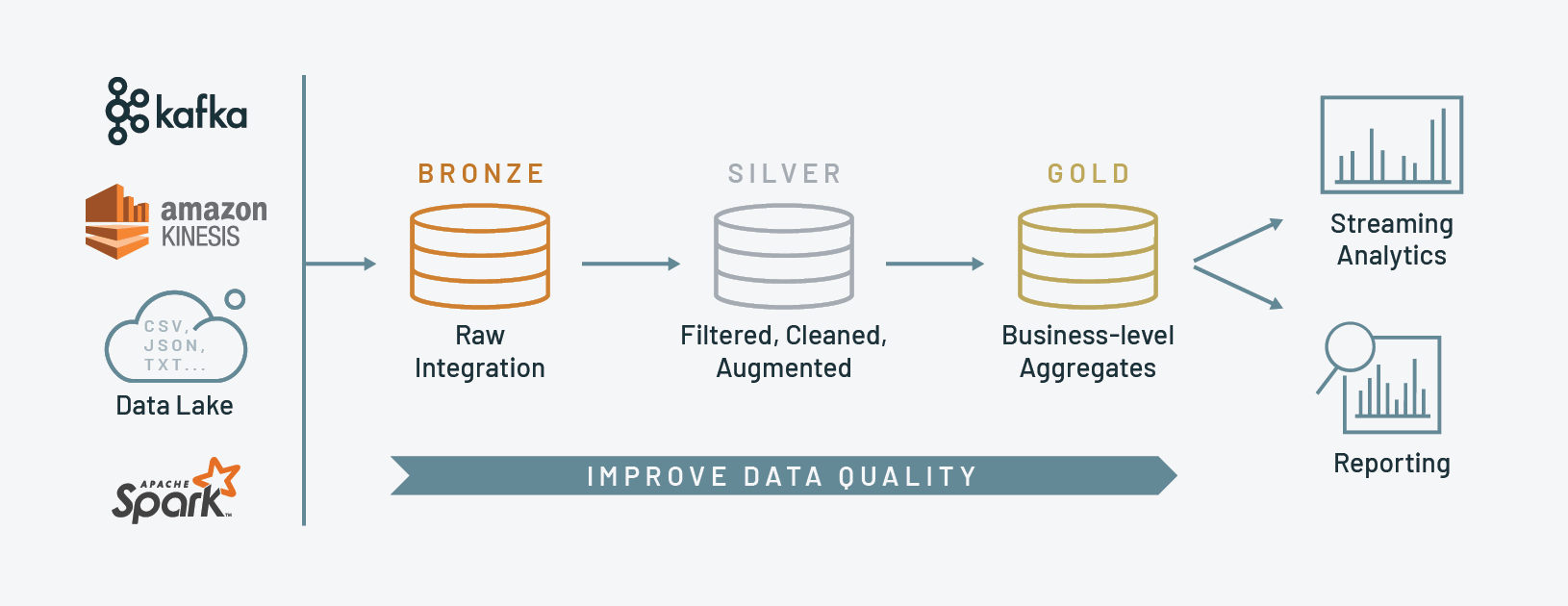

Empower follows the data management pattern known as the Medallion Architecture. The Medallion Architecture focuses on enabling rapid, reliable, and scalable data pipelines while providing robust data governance and quality checks.

Data quality improves as it moves from left (Bronze) to right (Gold).

The Medallion Architecture is typically represented as a series of layers or stages, each with a specific role in data processing. Here's a simplified representation:

- Bronze Layer: The "raw" or "ingest" layer, where data is ingested from various sources in its original, unprocessed form. The goal is to capture a complete and accurate representation of the source data.

- Silver Layer: The "clean" or "trusted" layer, where data is processed, cleaned, and transformed. Quality checks and data governance rules are applied in this layer to ensure the data is accurate, reliable, and ready for analysis.

- Gold Layer: The "semantic" or "consumption" layer, where data is further transformed and enriched into a format suitable for consumption by end-users (like business analysts) or applications (like BI tools). The goal is to create a user-friendly, high-value dataset that drives insights and decision-making.
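A toy Python version of the three layers may help. The record fields, the quality rules (numeric amount, non-negative, no duplicate `order_id`), and the revenue-per-country Gold view are all hypothetical; a real Medallion pipeline would persist each layer as tables in the Lakehouse rather than pass Python lists between functions.

```python
from dataclasses import dataclass

@dataclass
class Order:
    order_id: int
    country: str
    amount: float

def to_bronze(source_rows: list[dict]) -> list[dict]:
    # Bronze: keep every ingested row exactly as received (copied, unmodified).
    return [dict(r) for r in source_rows]

def to_silver(bronze: list[dict]) -> list[Order]:
    # Silver: type-cast, normalize, deduplicate, and drop rows failing quality checks.
    seen: set[int] = set()
    clean: list[Order] = []
    for r in bronze:
        try:
            order = Order(int(r["order_id"]), str(r["country"]).strip().upper(), float(r["amount"]))
        except (KeyError, ValueError, TypeError):
            continue  # a real pipeline would quarantine the row instead of skipping it
        if order.order_id in seen or order.amount < 0:
            continue
        seen.add(order.order_id)
        clean.append(order)
    return clean

def to_gold(silver: list[Order]) -> dict[str, float]:
    # Gold: aggregate into a business-ready view (revenue per country).
    revenue: dict[str, float] = {}
    for o in silver:
        revenue[o.country] = revenue.get(o.country, 0.0) + o.amount
    return revenue

raw = [
    {"order_id": "1", "country": " us", "amount": "20.0"},
    {"order_id": "1", "country": "US", "amount": "20.0"},  # duplicate
    {"order_id": "2", "country": "de", "amount": "15.5"},
    {"order_id": "3", "country": "US", "amount": "oops"},  # malformed amount
    {"order_id": "4", "country": "us", "amount": "5"},
]
bronze = to_bronze(raw)
silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'US': 25.0, 'DE': 15.5}
```

Bronze still holds the duplicate and the malformed row; only Silver filters them out, so the raw history can always be reprocessed if the quality rules change.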

Deployment Hierarchies

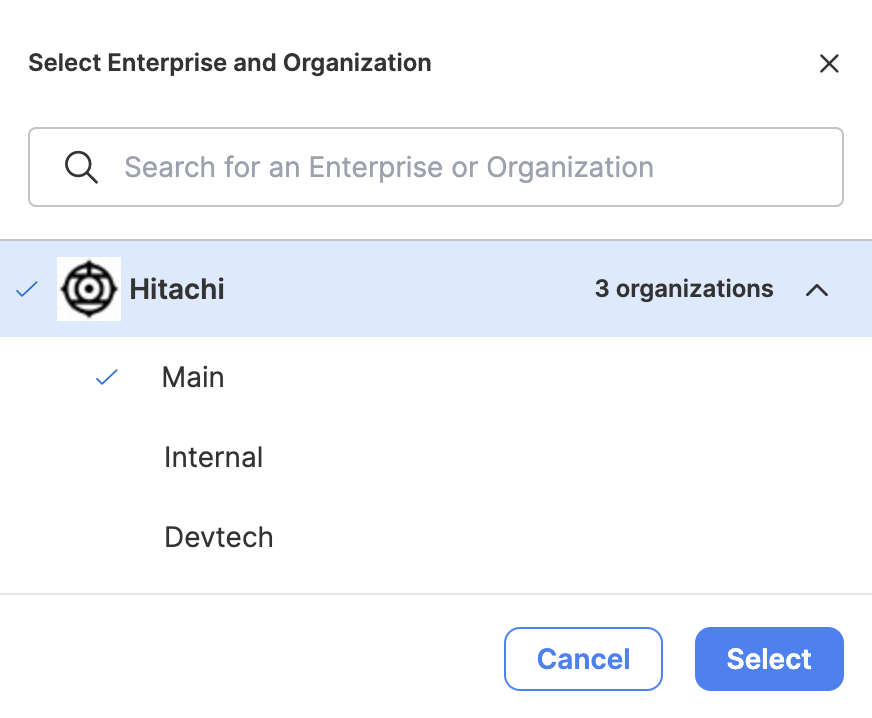

Empower deployments are organized into a three-tier hierarchy. Each tier has its own granular set of role-based access control (RBAC) permissions and inherits permissions from its parent.

- Enterprise: the highest tier, in which a single SSO provider is defined.

- Organization: the middle tier, belonging to an Enterprise. Typically used to delineate physical locations, permissions, or even separate contracts within an Enterprise.

- Environment: the lowest tier, belonging to an Organization. Typically used to define development, staging, and production configurations so that new configuration work can be iterated on rapidly.

For example, Hitachi (Enterprise) contains three Organizations, and Main (Organization) contains three Environments.
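A minimal sketch of the inheritance rule, assuming that a tier's effective permissions are simply its own grants combined with everything granted at its ancestors. The `Tier` class, the user names, and the permission strings (`view`, `edit_flows`, `run_flows`) are hypothetical; Empower's actual RBAC model may resolve permissions differently.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Tier:
    name: str
    level: str  # "Enterprise", "Organization", or "Environment"
    parent: Optional[Tier] = None
    grants: dict[str, set[str]] = field(default_factory=dict)  # user -> own permissions

    def effective_permissions(self, user: str) -> set[str]:
        # Assumed union model: own grants plus everything inherited from ancestors.
        inherited = self.parent.effective_permissions(user) if self.parent else set()
        return inherited | self.grants.get(user, set())

hitachi = Tier("Hitachi", "Enterprise", grants={"alice": {"view"}})
main = Tier("Main", "Organization", parent=hitachi, grants={"alice": {"edit_flows"}})
dev = Tier("development", "Environment", parent=main, grants={"bob": {"run_flows"}})
prod = Tier("production", "Environment", parent=main)

print(sorted(dev.effective_permissions("alice")))  # ['edit_flows', 'view']
print(sorted(prod.effective_permissions("bob")))   # []
```

Here a grant made at the Enterprise flows down to every Environment, while a grant made on one Environment stays local to it.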

Empower Data Lingo

Data Flows represent the movement of data across the data estate. Within Empower, flows can be categorized as Data Acquisition, Analytics Engineering, or Data Publishing flows:

- Data Acquisition Flows ingest data from sources. All of Empower's connectors handle schema drift automatically and are designed to be straightforward: you configure which data to ingest, and the tool handles the mechanics of extraction.

- Analytics Engineering Flows transform the data into usable information within the Lakehouse. You can customize these transformations to your heart's content: Empower supports notebook execution, and all you need to do is define the data transformation logic as SQL statements.

- Data Publishing Flows write data to external systems, such as Power BI, SQL servers, SFTP systems, and Dynamics CE or F&O.
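Automatic schema drift handling generally means that when a source adds or drops columns, ingestion adapts instead of failing. The sketch below shows one common additive strategy in plain Python: new columns are appended to the target schema, existing rows are backfilled with `None`, and columns missing from a new record are filled with `None`. It illustrates the general idea only, not how Empower's connectors are implemented; `evolve_schema` and `align` are hypothetical helper names.

```python
def evolve_schema(target_columns: list[str], incoming: dict) -> list[str]:
    # Append any columns the source has started sending, keeping existing order.
    return target_columns + [c for c in incoming if c not in target_columns]

def align(record: dict, columns: list[str]) -> dict:
    # Project a record onto the target columns, filling gaps with None.
    return {c: record.get(c) for c in columns}

columns = ["id", "name"]
table: list[dict] = []
batches = [
    [{"id": 1, "name": "Ada"}],
    [{"id": 2, "name": "Lin", "email": "lin@example.com"}],  # source added a column
    [{"id": 3}],  # source dropped a column
]
for batch in batches:
    for record in batch:
        new_columns = evolve_schema(columns, record)
        if new_columns != columns:
            # Backfill existing rows so every row carries the new column.
            table = [align(row, new_columns) for row in table]
            columns = new_columns
        table.append(align(record, columns))

print(columns)    # ['id', 'name', 'email']
print(table[-1])  # {'id': 3, 'name': None, 'email': None}
```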
